VideoDex: Learning Dexterity from Internet Videos

*Equal contribution, order decided by coin flip.

Abstract

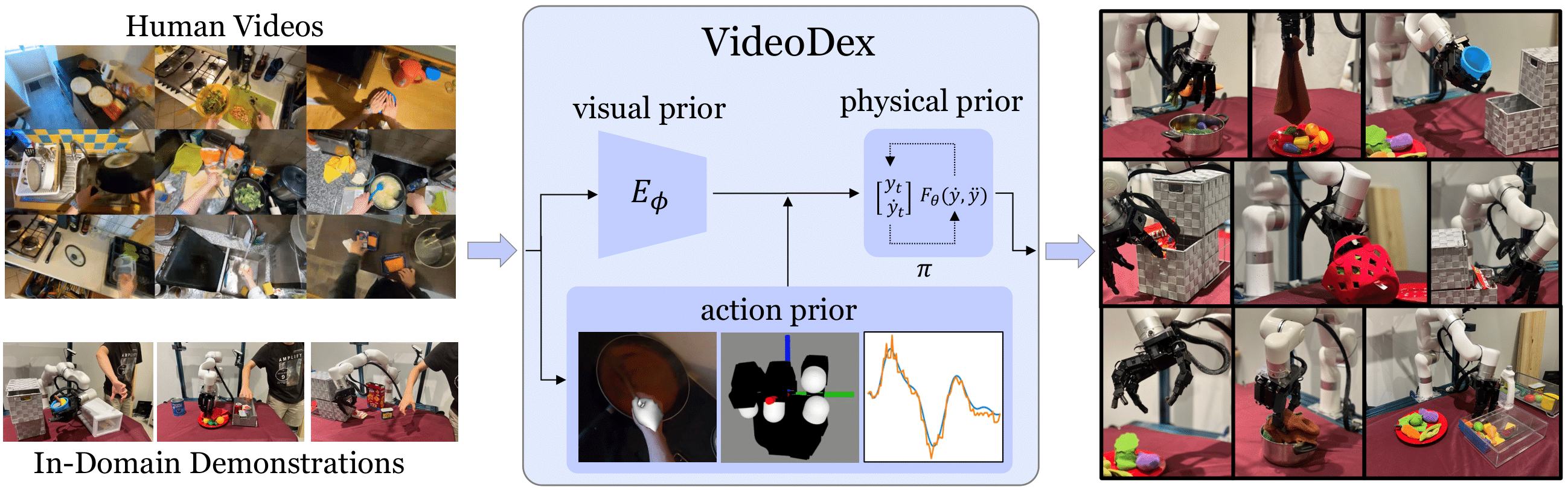

To build general robotic agents that can operate in many environments, it is imperative for the robot to collect experience in the real world. However, this is often infeasible due to safety, time and hardware restrictions. We thus propose leveraging the next best thing to real-world experience: internet videos of humans using their hands. Visual priors, such as visual features, are often learned from videos, but we believe that videos contain more information that can serve as a stronger prior. We build a learning algorithm, VideoDex, that leverages visual, action and physical priors from human video data to guide robot behavior. The action and physical priors in the neural network encode typical human behavior for a particular robot task. We test our approach on a system consisting of a robot arm and a dexterous hand, and show strong results on many different manipulation tasks, outperforming various state-of-the-art methods.
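This page does not say how the physical prior is implemented. One common way to build such a prior, assumed here purely for illustration, is to have the network predict a goal for a second-order spring-damper system and integrate that system to produce smooth joint trajectories. In this sketch, `rollout_second_order`, the gains `alpha` and `beta`, and the 16-dimensional joint vector are hypothetical choices, not VideoDex's code.

```python
import numpy as np

def rollout_second_order(y0, goal, alpha=25.0, beta=6.25, dt=0.01, steps=300):
    """Integrate y'' = alpha * (beta * (goal - y) - y') with semi-implicit Euler.

    Hypothetical gains: beta = alpha / 4 makes the continuous system critically
    damped, so y converges smoothly to goal. In a learned policy, goal (and possibly
    the gains) would be predicted by the network rather than fixed.
    """
    y = np.asarray(y0, dtype=float).copy()
    goal = np.asarray(goal, dtype=float)
    yd = np.zeros_like(y)
    traj = [y.copy()]
    for _ in range(steps):
        ydd = alpha * (beta * (goal - y) - yd)
        yd = yd + dt * ydd  # update velocity first...
        y = y + dt * yd     # ...then position using the new velocity
        traj.append(y.copy())
    return np.stack(traj)

# Example: move 16 hypothetical hand joints from 0 rad toward 0.5 rad.
traj = rollout_second_order(np.zeros(16), np.full(16, 0.5))
print(traj.shape)  # (301, 16)
```

Because the trajectory comes from integrating a stable dynamical system, small errors in the predicted goal yield smooth motions rather than jittery per-step actions, which is one motivation for this kind of prior.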

1) Learn Priors from Internet Video:

To use internet videos as pseudo-robot experience, we retarget the 3D MANO human hand detections to the 16-degree-of-freedom (DoF) Allegro robotic hand, and we retarget the wrist pose, observed from a moving camera, to the xArm6 arm embodiment. The retargeted data is then used as a prior to pretrain the policies.
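The retargeting step is not described in detail here. As a rough illustration under strong simplifying assumptions, the sketch below (a) expresses a wrist pose detected in the moving camera's frame in a fixed world frame by composing 4x4 homogeneous transforms, assuming a per-frame camera pose is available from some external estimate, and (b) maps a human fingertip position onto a toy two-link planar finger by scaling it to the robot finger's length and solving closed-form inverse kinematics. Real Allegro fingers have four joints and move in 3D, the link lengths below are made up, and all function names are hypothetical.

```python
import numpy as np

def wrist_in_world(T_world_cam, T_cam_wrist):
    """Express a wrist pose seen in the (moving) camera frame in a fixed world frame.

    Both inputs are 4x4 homogeneous transforms; the per-frame camera pose is an
    assumed external input (this page does not say how it is obtained).
    """
    return T_world_cam @ T_cam_wrist

def planar_finger_ik(target_xy, l1, l2):
    """Closed-form IK for a toy 2-link planar finger (the branch with q2 >= 0)."""
    x, y = target_xy
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    q2 = np.arccos(np.clip(c2, -1.0, 1.0))  # clipping handles unreachable targets
    q1 = np.arctan2(y, x) - np.arctan2(l2 * np.sin(q2), l1 + l2 * np.cos(q2))
    return q1, q2

def planar_finger_fk(q1, q2, l1, l2):
    """Fingertip position of the toy finger for joint angles (q1, q2)."""
    return np.array([
        l1 * np.cos(q1) + l2 * np.cos(q1 + q2),
        l1 * np.sin(q1) + l2 * np.sin(q1 + q2),
    ])

def retarget_fingertip(human_tip_xy, human_len, robot_l1, robot_l2):
    """Scale a human fingertip position (relative to the finger base) to the robot
    finger's total length, then solve for the robot joint angles."""
    scale = (robot_l1 + robot_l2) / human_len
    target = scale * np.asarray(human_tip_xy, dtype=float)
    return planar_finger_ik(target, robot_l1, robot_l2)

# Example with made-up lengths in meters.
q1, q2 = retarget_fingertip([0.05, 0.03], human_len=0.09, robot_l1=0.054, robot_l2=0.045)
print(planar_finger_fk(q1, q2, 0.054, 0.045))  # approximately [0.055, 0.033]
```

Matching fingertip positions rather than copying joint angles is one way to cope with the different link lengths of human and robot fingers; a full retargeter would typically optimize over all fingers and joints at once.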

2) Collect a Few Teleoperated Demonstrations:

We quickly collect a few teleoperated demonstrations to help bridge the gap between the internet data and the robot embodiment.
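Pretraining on plentiful but imperfect retargeted video data and then adapting with a handful of on-robot demonstrations can be sketched as follows. The page does not specify the architecture, loss, or fine-tuning schedule, so this sketch assumes a linear behavior-cloning policy trained with a squared-error loss on synthetic data, with the "embodiment gap" modeled as a random perturbation of the true policy. Names such as `train_bc` and `W_gap` are hypothetical.

```python
import numpy as np

def bc_loss(W, X, Y):
    """Mean (over samples) squared error of a linear policy a = x @ W."""
    return float(np.mean(np.sum((X @ W - Y) ** 2, axis=1)))

def train_bc(W, X, Y, epochs):
    """Full-batch gradient descent on bc_loss with step 1/L, where L is the
    Lipschitz constant of the gradient, so the loss never increases."""
    n = X.shape[0]
    L = 2.0 / n * np.linalg.eigvalsh(X.T @ X).max()
    for _ in range(epochs):
        grad = 2.0 / n * X.T @ (X @ W - Y)
        W = W - grad / L
    return W

rng = np.random.default_rng(0)
obs_dim, act_dim = 8, 16  # 16 matches the Allegro hand's DoF; obs_dim is arbitrary
W_robot = rng.normal(size=(obs_dim, act_dim))  # hypothetical "true" robot policy

# Plentiful retargeted video data: close to, but not exactly, robot behavior.
X_vid = rng.normal(size=(2000, obs_dim))
W_gap = 0.3 * rng.normal(size=(obs_dim, act_dim))  # stand-in for the embodiment gap
Y_vid = X_vid @ (W_robot + W_gap) + 0.1 * rng.normal(size=(2000, act_dim))

# A few teleoperated demonstrations on the real embodiment.
X_demo = rng.normal(size=(20, obs_dim))
Y_demo = X_demo @ W_robot

W_pre = train_bc(np.zeros((obs_dim, act_dim)), X_vid, Y_vid, epochs=200)
W_ft = train_bc(W_pre, X_demo, Y_demo, epochs=200)
print(bc_loss(W_pre, X_demo, Y_demo), bc_loss(W_ft, X_demo, Y_demo))
```

With step size 1/L, each gradient step cannot increase the loss on the data being fit, so fine-tuning lowers the demonstration loss relative to the pretrained weights. In a real system the policy would be a neural network over images, and the hope is that pretraining supplies structure that a few demonstrations alone cannot.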

3) Autonomous Results: